Greetings, Gumshoes!

Welcome to the second post in a series on being a Misinformation Sleuth. In our last installment, we introduced two frameworks for recognizing misinformation and used lateral reading to do a background check, i.e., vet information sources for possible biases before engaging deeply with the information. One of these frameworks is Stanford's Civic Online Reasoning (COR), which is built on three questions:

- Who's behind the information?

- What's the evidence?

- What do other sources say?

The second framework is Professor Mike Caulfield's four-part SIFT methodology, which stands for Stop, Investigate the source, Find trusted coverage, and Trace claims to their origin.

Doing a background check by lateral reading is just one element of misinformation sleuthing. Maybe the author or publisher of the information doesn't raise a red flag, or maybe there's not enough information out there to tell if a source is credible (for example, the person posting the information may not be a public figure). No one source of information is infallible, so it's important that any factual claims are supported by solid evidence.

Work the case

The keyword in the above sentence is "solid," which, according to COR, means evidence that is both reliable and relevant. As we noted with lateral reading, no resource is likely to make itself look bad or less than credible, and research has shown that even highly educated people can fall for bad information in an appealing package like a nice website design. When information is paired with a visual element like a photo or a video, research indicates that people are more likely to take the information at face value.

My favorite example of this was in the spring of 2020, when much of the world was in lockdown as a response to COVID-19 and reports of wild animals making themselves at home in empty streets and waterways popped up on social media. But sadly, most of the feel-good stories of swans, dolphins, and others were faked (except these Welsh goats).

Most people sharing these posts had good intentions of wanting to share a little joy in a universally trying time. But even with the best intentions, anyone sharing these posts still contributed to the problem of spreading misinformation. These posts (and their more nefarious brethren) rely on a common trick that requires no image editing skills or software: the visual components were removed from their original context and paired with a misleading or false claim.

As Mike Caulfield, the architect of SIFT, wrote in his original blog post introducing the concept, his framework allows information consumers to "reestablish the context that the web so often strips away, allowing for more fruitful engagement with all digital information." (And in my experience, this statement also applies to the COR framework.)

I came across an example that really illustrates this point on Snopes, examining the claim that there is a rising trend of fires at food processing plants. The post includes an image where all the headlines are shown together, creating an impression that there may be something sinister underpinning these fires. However, when Snopes investigators looked into it, they found that the evidence provided fell apart like a wet paper bag: the original post's examples of fires were spread over more than a year, some buildings were not operational or not full-fledged food processing plants, none of the fires were suspected to be arson, and a couple dozen fires account for less than 1% of the hundreds of food processing plants in the US. In short: the only suspected arson here is someone fanning the flames of online misinformation.

The plot thickens

Using out-of-context images is something almost anyone can do, but it doesn't stop there. Photo and video editing tools abound as well, adding a level of sophistication to misinformation that's even harder to spot. Doctored videos, including the synthetic videos known as "deepfakes," have become part of the misinformation landscape, especially in the political world. A few weeks after Russia began invading Ukraine, a Ukrainian news site was hacked to show a deepfake of President Volodymyr Zelenskyy telling his fellow Ukrainians to surrender to Russia.

In this case, the deepfake wasn't a very good one; even those who can't identify the wrong accent would notice that the body is motionless. But if an image or video is less easy to debunk, there are ways to use tools like Google Images to try to uncover the original source of images. This is a process called "reverse image searching," and it uses search engines or databases full of images to compare across resources. Snopes has a helpful guide that covers not just how to conduct a reverse image search, but also clues that an image might have been manipulated. This Snopes post from last week also shows the process in action.

For another deep dive into this process from the perspective of a professional fact-checker working at Reuters, you can watch this video from the News Literacy Project.

And finally: with so much information all around us, trying to verify everything you see is a huge undertaking for an individual. We'll look more closely at leveraging trusted information sources in the next installment of this series. But for now, suffice it to say that there are professionals who do the work of fact-checking and reverse image searching for you. And often, using lateral reading skills will reveal that someone who fact-checks for a living has already done the work.

Are you too close to the case?

In the previous post in this series, we learned through lateral reading that a nonprofit website questioning the effects of increased carbon dioxide in the atmosphere was funded by major fossil fuel companies. Doing a background check reminds us that information creators have biases that color what they say, whether those biases come from their political opinions, their funding sources, or even a desire to sow chaos.

But no one is without bias: not the people creating information and definitely not the people consuming information. One insidious obstacle to our misinformation sleuthing is confirmation bias, which the News Literacy Project defines as "the tendency to search for, interpret and recall information in a way that supports what we already believe."

The nonprofit Facing History and Ourselves created a short video that addresses bias in media portrayals, both in which material journalists choose to cover and how information consumers interpret information. The video's first interviewee is a photographer who captured a police officer firing a tear gas canister during the Ferguson protests in 2014. Although the photo was paired with a short, descriptive caption, some people viewed it as an illustration of police dedicated to protecting a community, while others viewed it as emblematic of an aggressive, militarized police force. In other words, when people viewed an image paired only with a factual description, they projected their own worldview and values onto that image. View the full video.

So remember: when you're using your sleuthing skills to try to establish context and verify information that you see online, make sure you're taking time for some valuable self-reflection.

If you'd like to continue this series, read Part Three, or go back to Part One.

If you'd like to learn more about these and other skills to help you spot and stop misinformation, please register for the next session of our online program "How to Spot Misinformation," which will be held the evening of May 18. In the meantime, if you need to get in touch with a librarian on this or any other topic, just ask!

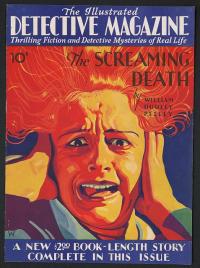

The image used for this blog post was found by searching the Library of Congress Prints & Photographs Online Catalog.